Deep Dive

Autonomous Driving

Autonomous driving will bring numerous social, environmental and economic benefits. It will make our roads safer and traffic more efficient, save people valuable time, and help create affordable mobility concepts for everyone. By enabling better capacity utilization, it can reduce both the number of cars and the number of parking spaces needed in city centers, which also saves resources.

Compared to e-mobility, the developments in the autonomous driving sector are still at an early stage, and there are still a number of challenges to be solved before they are fully ready for the market. The current players on the market are at different stages of autonomy and in some cases are pursuing different approaches.

Waymo, a subsidiary of Google’s parent company Alphabet, already operates a Level 4 autonomous test system in Arizona, in which an autonomous vehicle transports people within a defined area of 130 square kilometers. For this area, a high-resolution three-dimensional map of the surroundings has been created, showing all roads, traffic lights and signs. Waymo uses lidar sensors in addition to cameras and radar sensors to detect the environment and enable autonomous driving.

Chinese player Baidu is taking a similar approach with its “Apollo” project, using three sensor systems: camera, radar and lidar. Baidu currently operates 10 “Apollo Go” vehicles in a 3.1-square-kilometer service area with 8 predefined destinations, where users can book a ride for a flat fee of $4.60.

One of the best-known players in the field of autonomous driving is Tesla. Unlike the other providers, Tesla bases its approach solely on computer vision with cameras, which reduces the number of sensors needed. Tesla recently started delivering new cars without radar sensors.

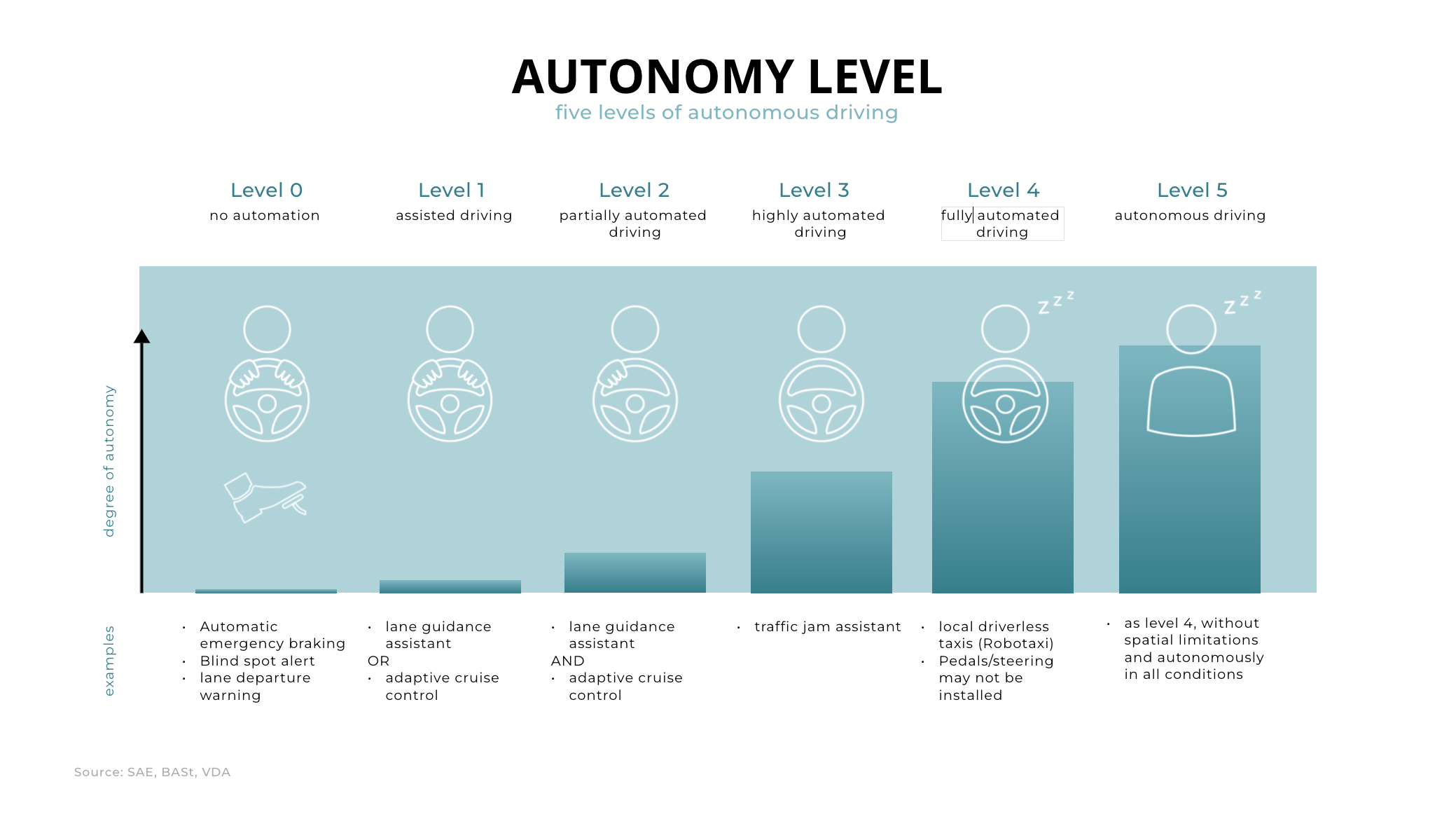

The path to full autonomy

Full market readiness for Level 5 autonomy depends on two critical factors: technological maturity and regulatory approval. Tesla is taking a data-driven approach to seeking approval, comparing accident data collected by its vehicles with Autopilot enabled and with Autopilot disabled. In the fourth quarter of 2021, Tesla’s vehicles with Autopilot enabled were involved in one accident every 4.3 million miles on average. For those with Autopilot disabled but active driver assistance features, the average distance per accident was 1.6 million miles. If none of the technical features offered were used, the average was only 484,000 miles per accident. With this data, Tesla aims to show that its Level 2 system already contributes to significantly greater safety on the road.
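To make the comparison easier to read, the quoted figures can be converted into accident rates and ratios. The following is a minimal sketch that uses only the three numbers given in the text; the dictionary keys and function names are our own labels, and the result is a raw ratio that does not adjust for differences in where and how each mode is used.

```python
# Average miles driven per accident, as quoted in the text (Tesla, Q4 2021).
# The key names are illustrative labels, not Tesla terminology.
MILES_PER_ACCIDENT = {
    "autopilot_enabled": 4_300_000,
    "assistance_features_only": 1_600_000,
    "no_assistance": 484_000,
}


def accidents_per_million_miles(miles_per_accident: float) -> float:
    """Convert 'miles per accident' into an accident rate per million miles."""
    return 1_000_000 / miles_per_accident


def distance_ratio(candidate: str, baseline: str) -> float:
    """How many times farther the candidate mode travels per accident."""
    return MILES_PER_ACCIDENT[candidate] / MILES_PER_ACCIDENT[baseline]


if __name__ == "__main__":
    for mode, miles in MILES_PER_ACCIDENT.items():
        rate = accidents_per_million_miles(miles)
        print(f"{mode}: {rate:.2f} accidents per million miles")
    ratio = distance_ratio("autopilot_enabled", "no_assistance")
    print(f"Autopilot vs. no assistance: {ratio:.1f}x the distance per accident")
```

On these figures, vehicles with Autopilot enabled covered roughly 8.9 times the distance per accident of vehicles using no assistance features, and roughly 2.7 times the distance of vehicles using driver assistance features only.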

Current Level 2 and Level 3 driving assistance systems may be used only on the condition that the driver can intervene at any time. The driver is required to make contact with the steering wheel at one-second intervals.

Level 4 autonomy does not require a driver to be on standby at all times. This makes it possible to use the driving time for sleeping, working or entertainment. In this scenario, the system is able to put itself in a safe state without human intervention. The driver is called upon only in an emergency and can take control directly or remotely via a data link until the software can act independently again.

BCG expects 10 percent of new cars sold to have Level 4 autonomy features starting in 2035. According to McKinsey, Level 3 and 4 autonomous cars will reach the price level of non-autonomous vehicles in the context of mobility services between 2025 and 2027, increasing adoption and accelerating market penetration. In our estimation, the adoption of Level 5 autonomy, where no driver is needed at all, will take somewhat longer, not only from a technological and regulatory perspective, but also in terms of social acceptance.

Nevertheless, we do see a 10x potential in the market for autonomous driving. According to RethinkX, autonomous driving services will be ten times cheaper than today’s cabs in the medium term. ARK assumes even more optimistic cost reductions and expects that, at scale, an autonomous robo-taxi ride will cost €0.13 per kilometer. The economic benefits to providers and consumers alone will be a strong driver of adoption. ARK predicts that autonomous cabs will contribute more than $2 trillion to GDP by 2035 in the U.S. alone. With the advent of this technology, global transportation could triple by 2030 as demand increases due to falling costs. This will further increase the potential of autonomous driving.

In the following section, we use a number of hypotheses to explain which technology approach we believe will prevail, what the biggest technological challenges are at present, and how the competing players can differentiate themselves.

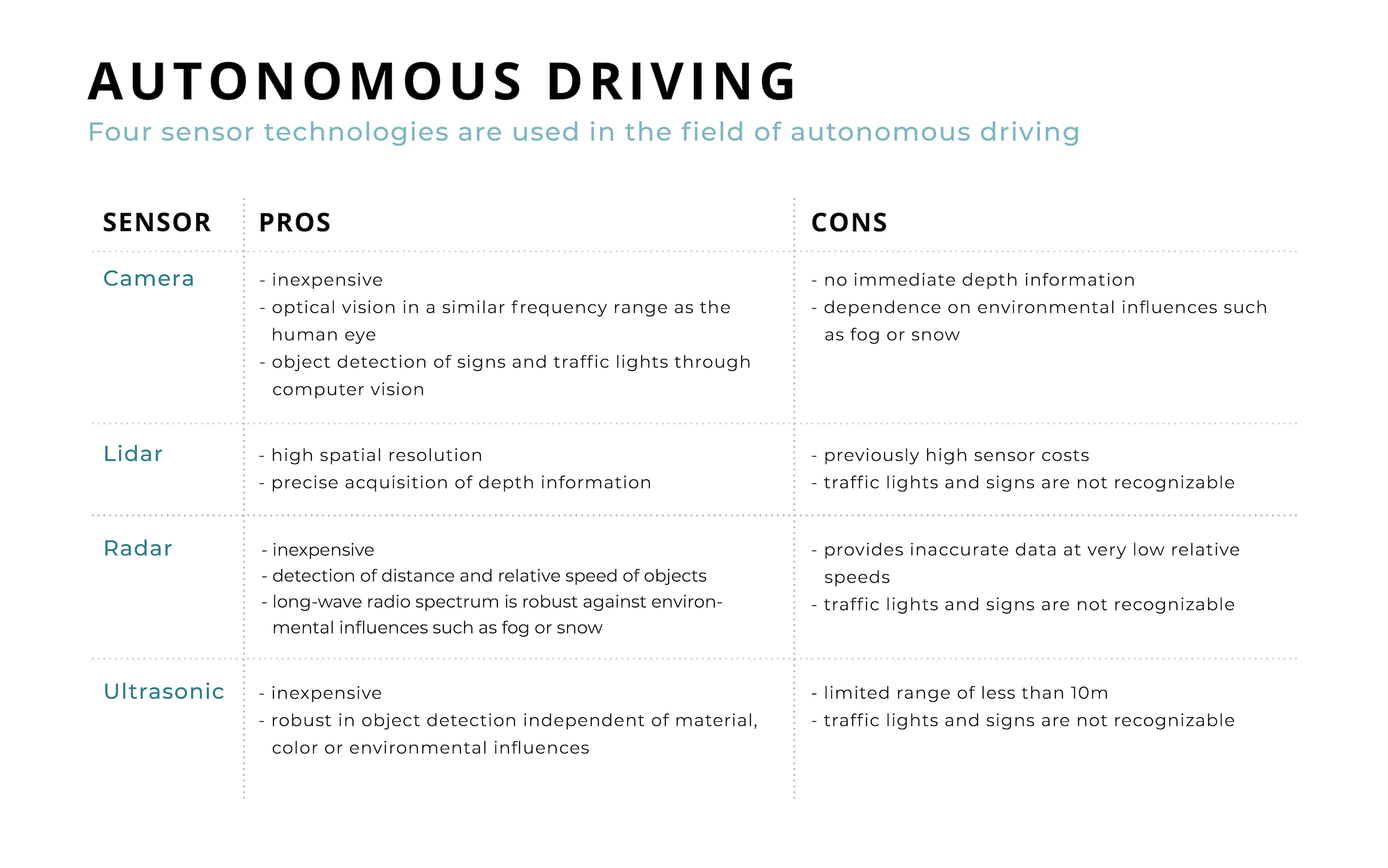

Hypothesis 1: In the medium term, focusing on the computer vision approach with multiple cameras as the main sensors may make sense, as evaluating multiple sensor types in a single software framework is currently the biggest challenge. In the long term, however, it is likely that input from sensors such as lidar and radar will also be needed to reliably map all edge case scenarios.

Currently, the most common approach is to use multiple types of sensors. Companies such as Waymo or Baidu are pursuing this approach and equipping their cars with various cameras, lidar and radar sensors in order to be able to recognize objects and the environment. The challenge here is that in certain situations, different sensors provide conflicting information that must first be combined into a unified digital model. In the test areas of Waymo and Baidu, high-resolution lidar maps are also used to enable precise positioning of the vehicle. However, the creation of these maps is hardly scalable, as every environment and every road around the world would have to be driven through, and all distances, road markings, traffic lights and the like would have to be recorded and regularly updated. A computer vision system with optical cameras as its only main sensors, on the other hand, can be used everywhere as soon as the existing technological challenges have been solved.
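To illustrate what combining conflicting sensor information can mean in the simplest case, the sketch below fuses several distance estimates for the same object using inverse-variance weighting, a textbook technique. It is not a description of how Waymo, Baidu or Tesla actually fuse data; the `RangeReading` type, the function name and the noise values in the example are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class RangeReading:
    """One sensor's distance estimate for the same object (hypothetical type)."""
    sensor: str
    distance_m: float
    std_dev_m: float  # assumed measurement noise, one standard deviation


def fuse_ranges(readings: List[RangeReading]) -> Tuple[float, float]:
    """Fuse estimates by inverse-variance weighting.

    Assumes independent, unbiased, roughly Gaussian errors.
    Returns (fused distance, fused standard deviation).
    """
    if not readings:
        raise ValueError("at least one reading is required")
    if any(r.std_dev_m <= 0 for r in readings):
        raise ValueError("standard deviations must be positive")
    # Less noisy sensors receive proportionally larger weights.
    weights = [1.0 / r.std_dev_m ** 2 for r in readings]
    total_weight = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total_weight
    fused_std = (1.0 / total_weight) ** 0.5
    return fused, fused_std


if __name__ == "__main__":
    # Illustrative values only; real sensor noise depends on range and conditions.
    readings = [
        RangeReading("camera", 42.0, 4.0),
        RangeReading("radar", 40.0, 1.0),
        RangeReading("lidar", 39.5, 0.2),
    ]
    distance, std = fuse_ranges(readings)
    print(f"fused distance: {distance:.2f} m (std {std:.2f} m)")
```

The fused value is pulled toward the sensor with the lowest assumed noise. The hard part in practice is what this sketch leaves out: knowing when a sensor's noise model no longer holds, for example in rain or glare.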

One of the biggest difficulties here is determining distances and depths without radar and lidar. Nevertheless, Tesla now relies on a pure computer vision approach for its models, using only cameras as input sensors. Accordingly, Tesla will deliver its cars without radar in the future. According to Andrej Karpathy, Tesla’s head of AI, the deep learning algorithm of the computer vision approach is now so advanced that it would be hampered by radar data.

Prof. Dr. Daniel Cremers, Professor of Computer Science and Mathematics at the Chair of Computer Vision and Artificial Intelligence at the Technical University of Munich, has been working in this field for more than 10 years. He also considers computer vision to be the more sensible approach on the road to autonomous driving. We assume that with the computer vision approach alone, an autonomy level can be achieved that is 10 times more reliable than the human driver. Whether this is sufficient for Level 4 or Level 5 autonomy approval remains to be seen. An autonomous driving system that can map all edge case scenarios will likely need additional sensors such as radar and lidar. However, there is still a long way to go before software is available that can combine all this data without interference and reliably filter out the so-called “noise”.

Hypothesis 2: The key challenge is to develop a software system that can use sensor inputs to recognize its environment and make reliable predictions about possible movements of surrounding objects.

For a vehicle to drive fully autonomously, it must be able to recognize and classify the objects in its environment and predict their possible movements. This is not easy, because even objects with the same appearance can have different movement patterns: a vehicle at the side of the road, for example, may be parked or about to pull out. In addition, visibility conditions are constantly changing due to weather, light and surroundings. The environment must not only be identified, but also contextualized. A stop sign on the road has a different meaning than one on a bus or under the arm of a construction worker. In order to evaluate the data from the sensors, a complex software system is needed, a kind of brain that combines the various sensor data into a common model and evaluates it. Among other techniques, so-called deep neural networks are used for this purpose. They are loosely modeled on structures in the human brain and have made great progress since 2012.
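The prediction step can be illustrated with the simplest possible baseline, a constant-velocity model. This is a sketch under strong assumptions, not how production systems predict motion; the `TrackedObject` type and `predict_positions` function are hypothetical names. It shows why appearance alone is not enough: two objects classified as "car" produce very different predictions depending on their estimated velocity.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class TrackedObject:
    """A classified object with an estimated position and velocity (hypothetical type)."""
    label: str
    x_m: float
    y_m: float
    vx_mps: float
    vy_mps: float


def predict_positions(
    obj: TrackedObject, horizon_s: float, step_s: float
) -> List[Tuple[float, float]]:
    """Predict future (x, y) positions assuming the velocity stays constant."""
    if step_s <= 0 or horizon_s <= 0:
        raise ValueError("horizon_s and step_s must be positive")
    steps = int(round(horizon_s / step_s))
    return [
        (obj.x_m + obj.vx_mps * k * step_s, obj.y_m + obj.vy_mps * k * step_s)
        for k in range(1, steps + 1)
    ]


if __name__ == "__main__":
    # Same label, different motion: one car is parked, the other is pulling out.
    parked = TrackedObject("car", x_m=10.0, y_m=3.0, vx_mps=0.0, vy_mps=0.0)
    pulling_out = TrackedObject("car", x_m=10.0, y_m=3.0, vx_mps=2.0, vy_mps=-0.5)
    for car in (parked, pulling_out):
        print(car.label, predict_positions(car, horizon_s=3.0, step_s=1.0))
```

A learned model replaces the constant-velocity assumption with predictions conditioned on context, such as brake lights, turn signals or an occupied driver's seat.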

In order to train the artificial brain, large amounts of training data are usually required. In Tesla’s vehicles, for example, this data is collected whenever the driver overrides an assistance system to prevent hazards or to avoid obstacles. To analyze the data and improve the artificial brain, Tesla has one of the fastest supercomputers in the world, called “Dojo”, and an AI chip developed specifically for autonomous driving that is 21 times faster than the chip Tesla had previously purchased from Nvidia.

Hypothesis 3: An extensive and diverse data set from real-world driving to train the algorithm is key to Level 5 autonomy and a critical asset in the race for market leadership.

In road traffic, there are countless, diverse situations that require an ad hoc reaction, such as a construction site, an animal on the road or an accident. While humans are cognitively capable of making the right decision in most cases, the autonomous driving system must have seen a similar situation before in a training data set and be able to deduce what behavior would be appropriate. These so-called edge case scenarios occur very rarely, so a huge amount of driving data is needed to capture them.
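A back-of-the-envelope model shows why rare events drive data requirements. The sketch below assumes that an edge case occurs independently at a constant average rate per mile (a Poisson model), which is a simplification; the function names and the one-in-a-million-miles rate are hypothetical and chosen only for illustration.

```python
import math


def prob_seen_at_least_once(miles_driven: float, miles_per_event: float) -> float:
    """Probability that a rare event appears at least once in the data.

    Assumes events occur independently at a constant average rate
    of one per `miles_per_event` miles (Poisson model).
    """
    return 1.0 - math.exp(-miles_driven / miles_per_event)


def miles_needed(miles_per_event: float, target_prob: float) -> float:
    """Miles of driving needed to observe the event at least once
    with probability `target_prob`, under the same assumption."""
    if not 0.0 < target_prob < 1.0:
        raise ValueError("target_prob must be strictly between 0 and 1")
    return -miles_per_event * math.log(1.0 - target_prob)


if __name__ == "__main__":
    rate = 1_000_000  # hypothetical: one occurrence per million miles
    for target in (0.5, 0.95, 0.999):
        print(f"{target:.1%} chance of one sighting: {miles_needed(rate, target):,.0f} miles")
```

Under this model, seeing a given event once with 95 percent probability takes about three times the average distance between occurrences. Training usually needs many examples of each scenario across many scenarios, so the total requirement multiplies quickly.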

Today’s systems can reliably assess and evaluate most standard traffic situations. However, a Level 5 autonomous driving system must act correctly in 100 percent of the cases in which a human could act correctly and therefore needs appropriate data sets or abstraction methods for each edge case.

Tesla has a big data advantage here because each of its vehicles is capable of collecting driving data and transmitting it to the company’s research and development center. Since selling the first version of Autopilot in 2015, Tesla has been collecting all relevant Autopilot driving data from what are now more than 1 million cars sold. In April 2020, the company announced that it had collected 3 billion miles of autonomous driving data, a lead that competitors are unlikely to close.

In addition to data from the external sensors, Tesla also collects data on driver behavior, including the position of drivers’ hands on the controls and how they operate them. The collected data not only helps Tesla refine its systems, but also has tremendous value in itself. According to McKinsey, the market for data collected by vehicles is expected to reach a value of $750 billion per year by 2030.

Conclusion

Full autonomy in road transport will bring about the biggest change in mobility since the invention of the car. Although humanity as a whole will benefit enormously, these are challenging times for the established OEMs and their 800,000 employees in Germany alone. If European automotive companies and suppliers are unable to develop the technology they need themselves or gain access to it, they will have a hard time in the car market of the future. In addition, some estimates predict that the vehicle fleet in circulation will fall by 80 percent. This can be attributed to higher utilization and expected changes in consumer behavior. We go into more detail in the article on shared mobility.

Crucial to solving the current challenges in autonomous driving is the right combination of sensors (hardware) and algorithms (software) that enables the car to reliably recognize its environment and predict how that environment will behave. We consider the computer vision approach pursued by Tesla, among others, to be the right path toward a globally scalable solution in the medium term. The data sets needed to train the software are among the most valuable assets of the future.